Cracking A/B Testing Interviews

A behind-the-scenes look at how top product data teams evaluate A/B testing interviews. Learn how to think in terms of intent, metrics, and decisions — not just analysis.

A/B testing interviews look simple on the surface, but they are designed to test how you think — not how well you remember statistics. This session breaks down what interviewers are actually evaluating when they ask experimentation questions, and why many strong candidates fall short.

👋 Hey! This is Manisha Arora from PrepVector. Welcome to the Tech Growth Series, a newsletter that aims to bridge the gap between academic knowledge and practical aspects of data science. My goal is to simplify complicated data concepts, share my perspectives on the latest trends, and share my learnings from building and leading data teams.

This newsletter is based on insights from a live session led by Siddarth Ranganathan, Director of Data Science at Microsoft, and Banani Mohapatra, Senior Manager of Data Science at Walmart.

Between them, they bring decades of experience building experimentation systems, leading product-facing data science teams, and hiring data scientists across seniority levels. The patterns shared here reflect how real interviewers evaluate A/B testing answers in high-impact product organizations.

Author Introduction

Banani is a seasoned data science product leader with over 12 years of experience across e-commerce, payments, and real estate domains. She currently leads a data science team for Walmart’s subscription product, driving growth while supporting fraud prevention and payment optimization. She is known for translating complex data into strategic solutions that accelerate business outcomes.

Siddarth has 20+ years of experience across Tech, Healthcare, and eCommerce. He currently leads a team of 25+ Data Scientists and Product Managers delivering high-impact initiatives for Azure.

The Framework That Separates Strong Answers from Weak Ones

When interviewers ask an A/B testing question, they aren’t looking for a formula. They are looking for structured thinking that leads to a decision. Strong candidates consistently follow a clear mental framework — even if they don’t explicitly label it.

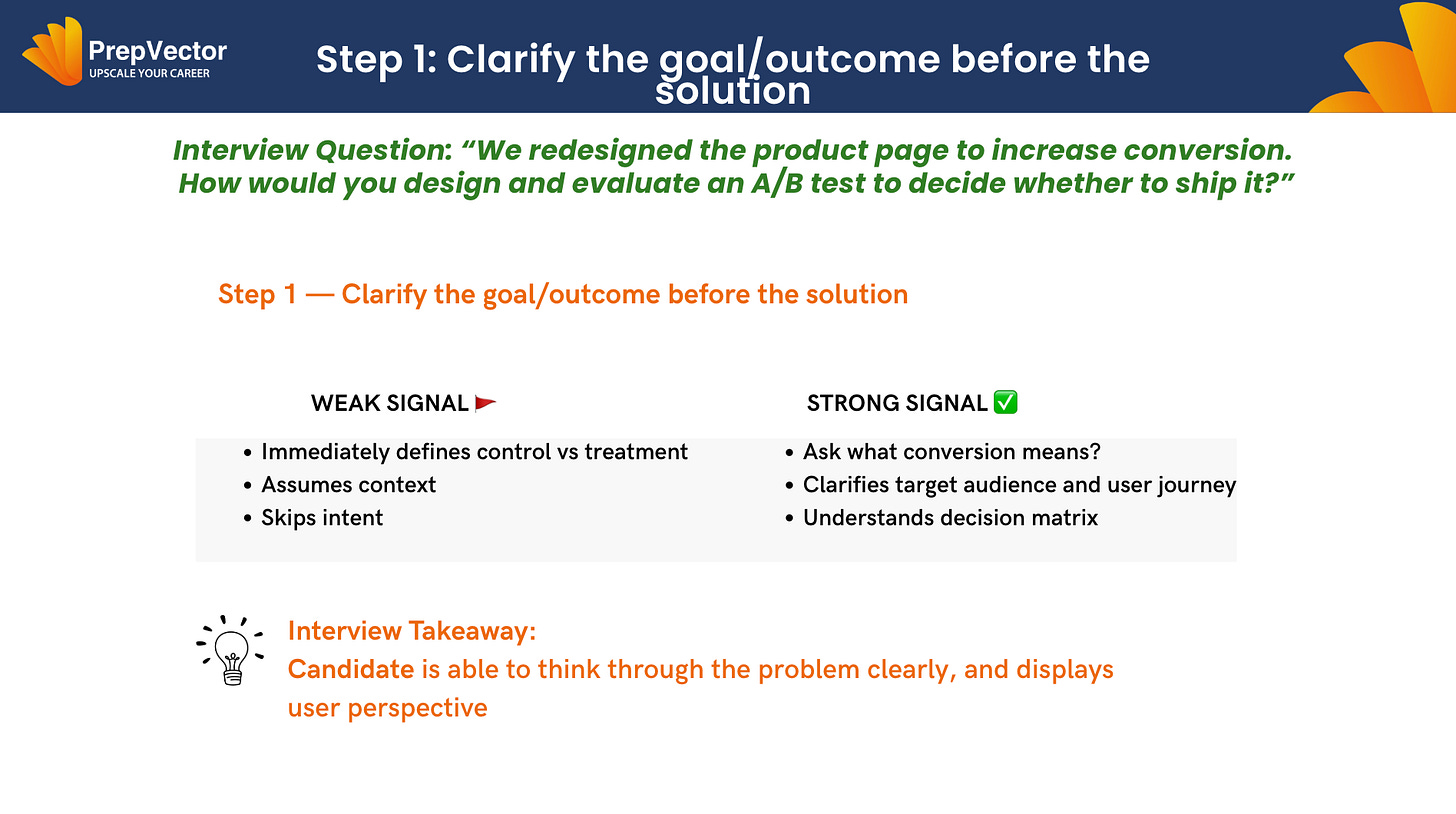

1. Clarify the Goal Before the Solution

Every strong A/B testing answer starts by understanding why the experiment exists. This step is about clarifying the intended outcome, the user context, and the decision the experiment is meant to unlock.

🔴 Weak Signal:

Immediately Defines Control vs Treatment

“I’d split users into control and treatment…”

“The control will see the old page, treatment the new one…”

Assumes Context

“Conversion here probably means signups.”

“This is likely for new users.”

🟢 Strong Signal: What hireable candidates do early

Clarifies what “conversion” actually means

Signup? Purchase? Add-to-cart? Downstream revenue?

Understands who the change affects and where it sits in the funnel

New vs returning users, discovery vs completion

Asks what decision the experiment must support

Ship / no-ship, partial rollout, timing or revenue constraints

Why it matters

They anchor on intent and decision-making, not mechanics.

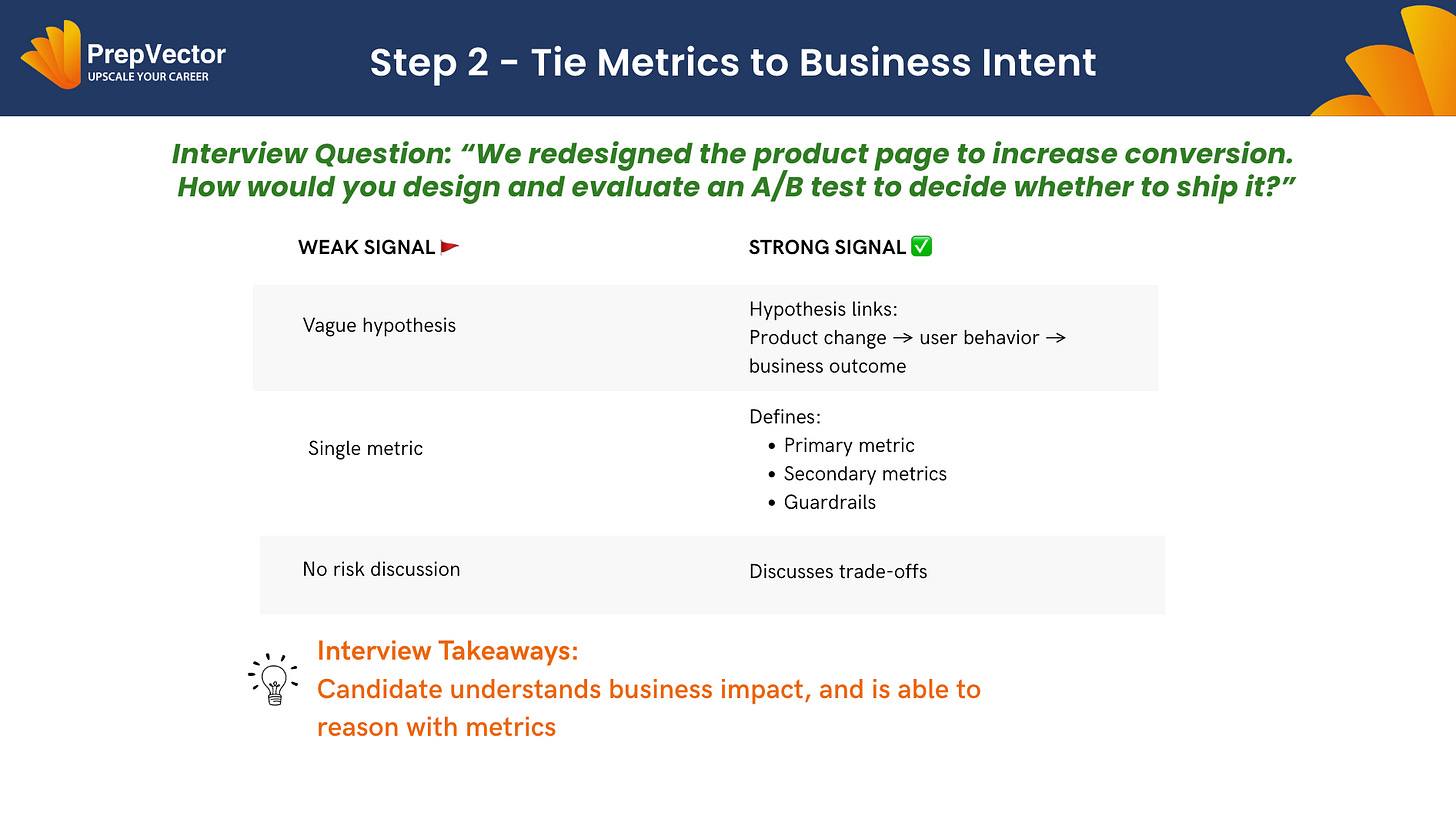

2. Tie Metrics to Business Intent

Once the goal is clear, the next step is translating intent into measurable outcomes. This is where candidates demonstrate whether they understand how metrics drive decisions. They define a primary metric, supporting metrics, and guardrails, and explain the trade-offs involved. Metrics are framed as tools for decision-making, not just numbers to report.

🔴 Weak Signal:

Vague Hypothesis

“The redesign will increase conversion.”

“Users will respond better to the new page.”

“We expect an uplift in metrics.”

Single Metric Focus

“I’d just look at conversion rate.”

“Conversion is the only thing that matters here.”

“If conversion goes up, we ship.”

No Risk Discussion

No mention of:

Revenue impact

User quality

Long-term retention

No guardrails

🟢 Strong Signal: What strong candidates do

States a causal hypothesis

Product change → user behavior → business outcome

Defines metric roles clearly

Primary: decision metric (e.g., purchases per visitor)

Secondary: diagnostics (e.g., add-to-cart, CTR)

Guardrails: risk control (AOV, refunds, latency, retention)

Explicitly discusses trade-offs

Higher conversion vs lower AOV, speed vs comprehension

Why it matters

Shows structured thinking and awareness that optimizing one metric can hurt another.
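
To make the metric roles concrete, here is a minimal sketch of how a primary metric, a diagnostic, and two guardrails could be written down before the test starts. The metric names, the observed changes, and the `max_harm` tolerances are all made-up illustrations, not numbers from the session.

```python
from dataclasses import dataclass


@dataclass
class MetricResult:
    name: str
    role: str                      # "primary", "secondary", or "guardrail"
    relative_change: float         # treatment vs control, e.g. 0.04 means +4%
    higher_is_better: bool = True
    max_harm: float = 0.0          # guardrails only: tolerated relative harm (assumed value)


def harmed(m: MetricResult) -> float:
    """Return how far the metric moved in its bad direction (0.0 if it improved)."""
    signed = m.relative_change if m.higher_is_better else -m.relative_change
    return max(0.0, -signed)


def breached_guardrails(results: list[MetricResult]) -> list[str]:
    """Names of guardrail metrics whose harm exceeds the agreed tolerance."""
    return [m.name for m in results if m.role == "guardrail" and harmed(m) > m.max_harm]


# Hypothetical readout: conversion is up, but average order value dropped 3%.
results = [
    MetricResult("purchases_per_visitor", "primary", 0.04),
    MetricResult("add_to_cart_rate", "secondary", 0.06),
    MetricResult("average_order_value", "guardrail", -0.03, max_harm=0.01),
    MetricResult("page_latency_ms", "guardrail", 0.005, higher_is_better=False, max_harm=0.02),
]

print(breached_guardrails(results))  # ['average_order_value']
```

Writing the tolerances down up front is what turns "higher conversion vs lower AOV" from a vague worry into a trade-off you can actually discuss in the interview.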

Shameless Plugs:

Many of the questions during the session pointed to the same gap: candidates often understand A/B testing concepts, but struggle to apply them in real, decision-driven scenarios.

To address this, PrepVector runs three focused programs.

A/B Testing Course for Data Scientists and Product Managers

Learn how top product data scientists frame hypotheses, pick the right metrics, and turn A/B test results into product decisions. This course combines product thinking, experimentation design, and storytelling—skills that set apart analysts who influence roadmaps.

Advanced A/B Testing for Data Scientists

Master the experimentation frameworks used by leading tech teams. Learn to design powerful tests, analyze results with statistical rigor, and translate insights into product growth. A hands-on program for data scientists ready to influence strategy through experimentation.

Product Data Science Bootcamp

Master product sense and A/B testing, and learn to use statistical methods to drive product growth. I focus on building a problem-solving mindset and on applying data-driven strategies, including A/B Testing, ML, and Causal Inference.

We’re currently offering limited scholarships across these programs. The codes apply to all the courses mentioned above and expire on January 26. Course links and discount codes are shared at the end.

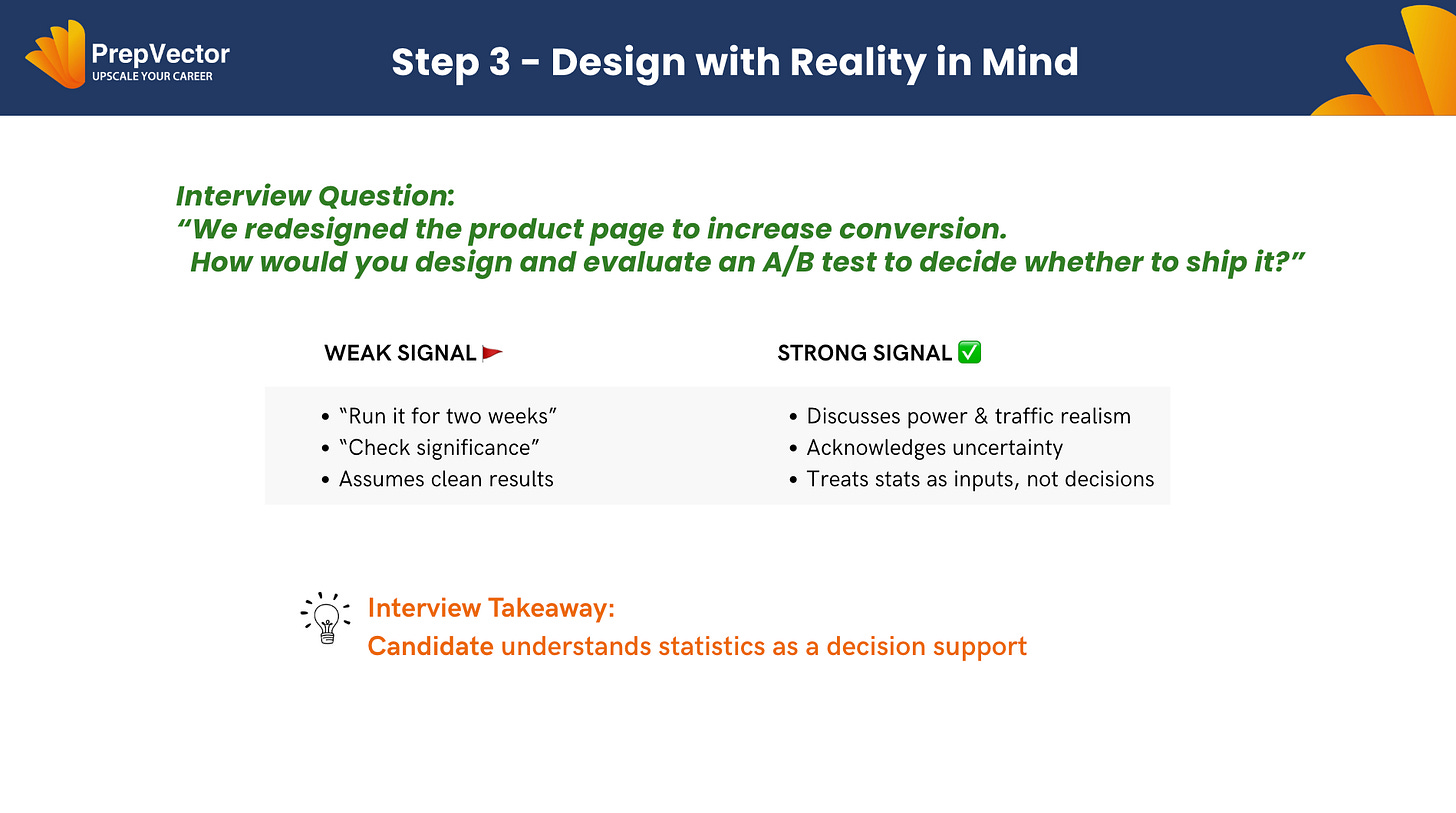

3. Design the Experiment With Reality in Mind

This step focuses on how the experiment would actually run in the real world. Strong candidates treat experimental design as a balance between statistical rigor and practical constraints.

🔴 Weak Signal:

Run it for two weeks

“I’d run the experiment for two weeks.”

“Two weeks should be enough to see results.”

“Check significance”

“If it’s statistically significant, we ship.”

“I’d check if p < 0.05.”

Assumes Clean Results

No mention of:

Inconclusive outcomes

Noise or variance

🟢 Strong Signal: What strong candidates do

Does not hide behind “run for two weeks” or “p < 0.05”

Acknowledges noise, variance, and inconclusive outcomes

Interprets results through trade-offs

Conversion up, revenue flat → possible quality risk

Makes a recommendation

Ship with guardrails, limited rollout, iterate, or hold

Why it matters

Demonstrates judgment under uncertainty — not checkbox experimentation.
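
One way to move past "run it for two weeks" is to derive the duration from the effect you care about and the traffic you have. The sketch below uses the standard normal-approximation formula for a two-sided two-proportion test. The baseline rate, minimum detectable lift, and daily traffic are assumed inputs, and rounding up to whole weeks is a common convention rather than a rule from the session.

```python
import math
from statistics import NormalDist


def sample_size_per_arm(baseline_rate, min_detectable_lift, alpha=0.05, power=0.80):
    """Approximate users needed per arm to detect a relative lift in a conversion rate."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_detectable_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)   # two-sided significance threshold
    z_beta = NormalDist().inv_cdf(power)            # quantile for the desired power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_needed(n_per_arm, daily_eligible_users, arms=2):
    """Days of traffic required, rounded up to full weeks to cover weekday effects."""
    raw_days = math.ceil(n_per_arm * arms / daily_eligible_users)
    return math.ceil(raw_days / 7) * 7


# Hypothetical scenario: 5% baseline conversion, 10% relative lift, 5,000 eligible users a day.
n = sample_size_per_arm(baseline_rate=0.05, min_detectable_lift=0.10)
print(n, days_needed(n, daily_eligible_users=5000))
```

If the answer comes back as many weeks, that is useful in itself: it opens the conversation about a larger minimum effect, a more sensitive metric, or whether the test is worth running at all.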

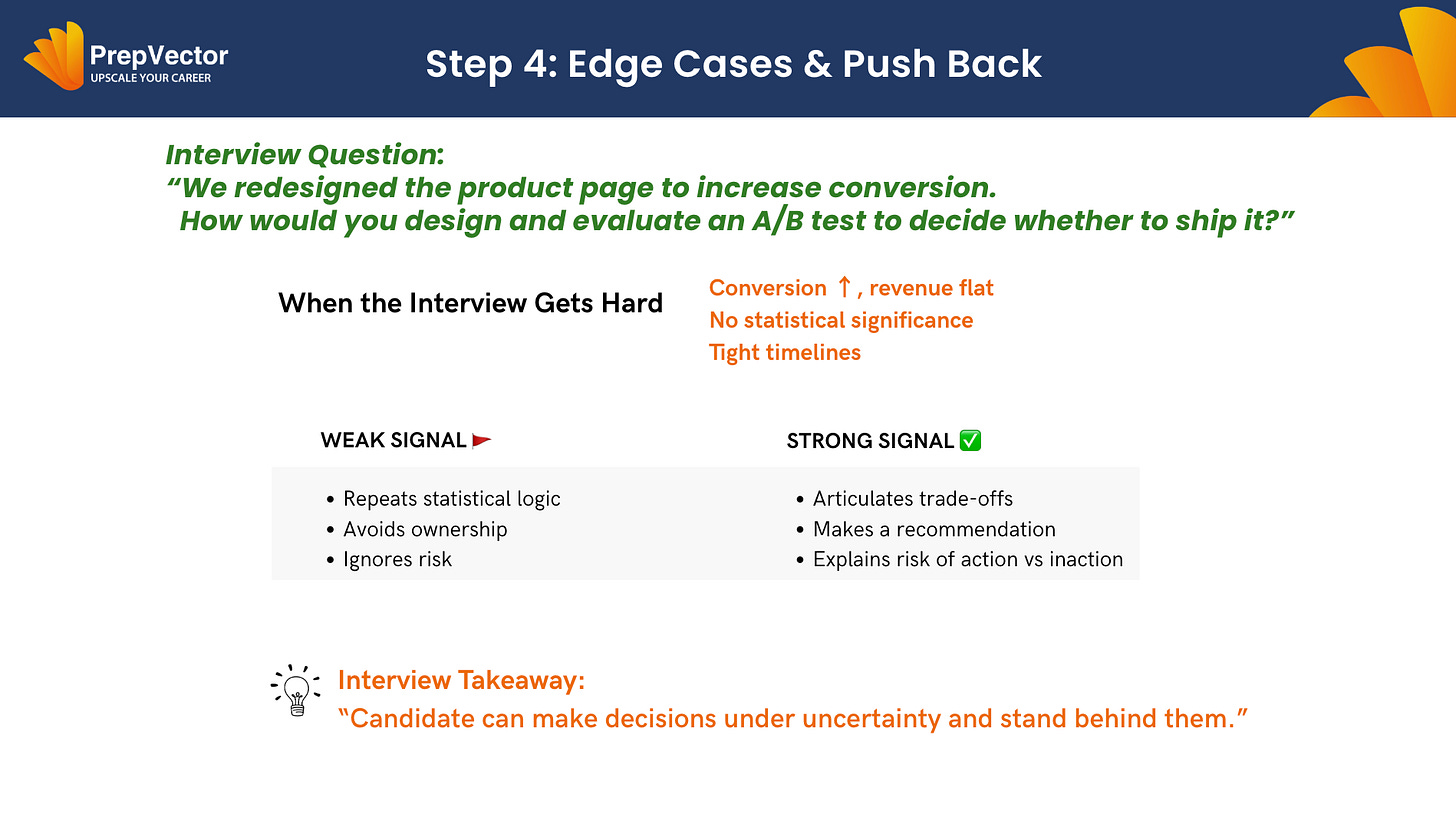

4. Handle Edge Cases and Pushback

Real experiments rarely produce clean, comfortable results. Interviewers deliberately push candidates into edge cases to see how they respond. This step reveals whether a candidate can think clearly under pressure and ambiguity.

🔴 Weak Signal:

Hides Behind Statistics

“Since it’s not statistically significant, we shouldn’t ship.”

“I’d wait until we reach significance.”

Treats Stats as a Verdict

“p > 0.05 means the experiment failed.”

“The confidence interval crosses zero.”

Avoids Ownership

“I’d share the results with the PM and let them decide.”

“It depends on business context.”

Defers the Decision

No clear stance on:

Ship

Hold

Partial rollout

🟢 Strong Signal: What a hireable candidate does

Treats statistics as an input, not a verdict

Takes ownership instead of deferring

Partners in the decision

Explains risk of action vs inaction

Upside left on the table vs downside at scale

Commits to a direction, with conditions

Why it matters

Signals production experience and decision-making maturity.
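
Here is a rough sketch of what "statistics as an input, not a verdict" can look like in practice. It computes a normal-approximation confidence interval for the difference in conversion rates, then maps it to a direction using a tolerable worst-case drop. The counts, the `max_tolerable_drop` threshold, and the decision rule are illustrative assumptions; a real team would agree on these with the product owner beforehand.

```python
import math
from statistics import NormalDist


def lift_interval(conv_c, n_c, conv_t, n_t, confidence=0.95):
    """Point estimate and confidence interval for the absolute lift (treatment - control)."""
    p_c, p_t = conv_c / n_c, conv_t / n_t
    se = math.sqrt(p_c * (1 - p_c) / n_c + p_t * (1 - p_t) / n_t)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    diff = p_t - p_c
    return diff, diff - z * se, diff + z * se


def recommend(diff, low, high, max_tolerable_drop):
    """Hypothetical rule: weigh the plausible downside instead of stopping at p > 0.05."""
    if low > 0:
        return "ship"
    if high < 0:
        return "hold"
    # Interval crosses zero: the result is inconclusive, so look at the worst case.
    if diff > 0 and low >= -max_tolerable_drop:
        return "limited rollout with guardrail monitoring"
    return "hold and iterate"


# Hypothetical readout: 5.0% vs 5.3% conversion with 20,000 users per arm.
diff, low, high = lift_interval(conv_c=1000, n_c=20000, conv_t=1060, n_t=20000)
print(recommend(diff, low, high, max_tolerable_drop=0.002))
```

With these numbers the interval crosses zero, yet the worst plausible drop is inside the assumed tolerance, so the rule lands on a limited rollout rather than "not significant, don't ship."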

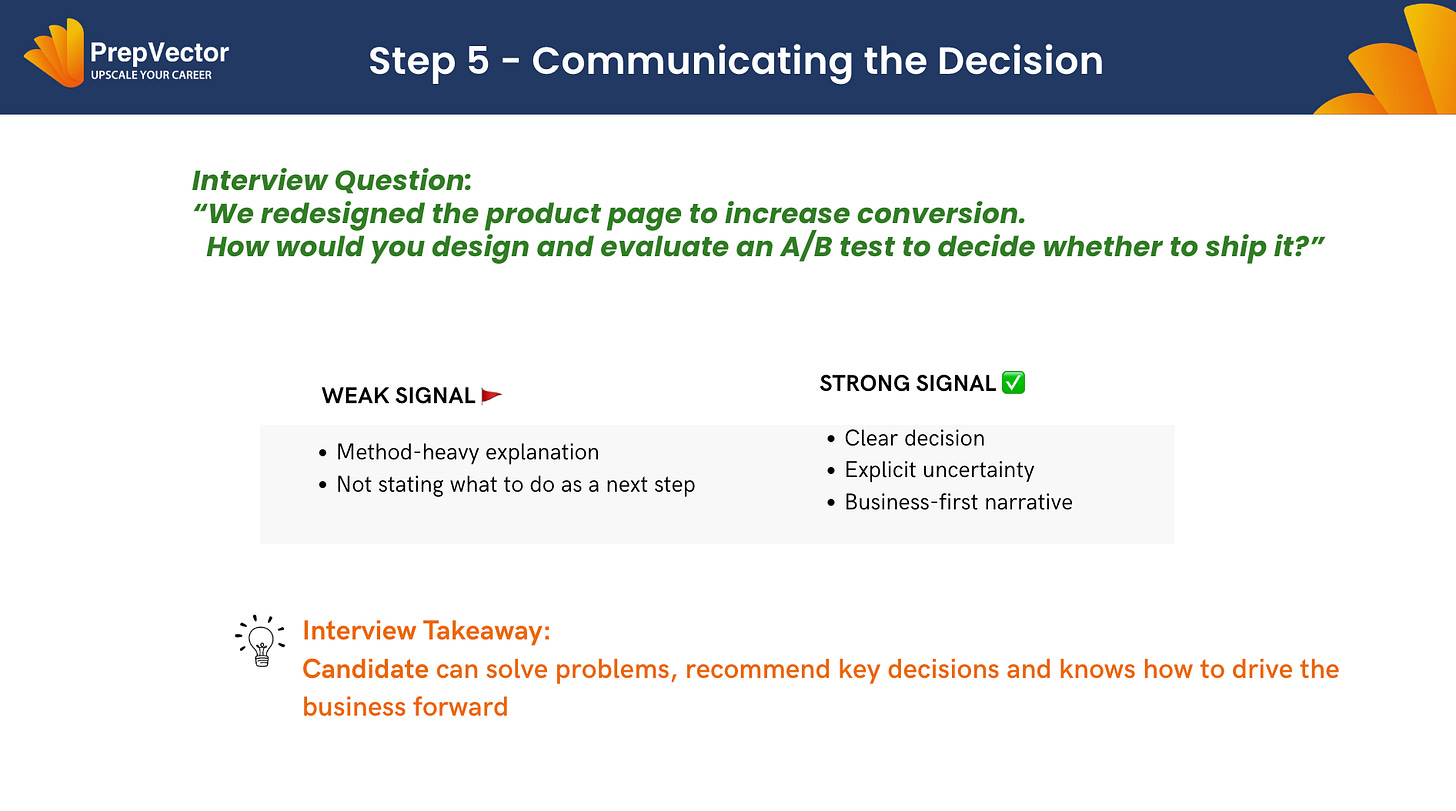

5. Communicate the Decision Clearly

The final step is often the most overlooked. An experiment is only valuable if it leads to a clear decision.

🔴 Weak Signal:

Method-Heavy Explanation

“We ran a t-test and got a p-value of 0.07.”

“The confidence interval crosses zero.”

“We used a 95% threshold.”

Lists Analysis Without Judgment

“Here are the results…”

“These are the metrics we looked at…”

No Clear Recommendation

Never states:

What to ship

What to hold

What to change

Avoids Commitment

Defaults to:

“We need more data.”

“Let’s wait.”

Weak answers stop at methodology and fail to state what should be done next.

🟢 Strong Signal: What a hireable candidate does

Leads with the recommendation

“I recommend a limited rollout…”

States uncertainty calmly and precisely

Directional lift, limited power, scale risk

Uses a business-first narrative

Revenue protection vs growth, risk asymmetry

Why it matters

Senior candidates decide, explain, and own the outcome.

At its core, an A/B testing interview is not about running experiments — it’s about making decisions under uncertainty. Candidates who demonstrate structured reasoning, product judgment, and confidence in their recommendations consistently stand out, long before any formulas come into play.

Want to Go Deeper? Learning Paths and Discounts!

If this framework resonates, PrepVector offers hands-on programs designed to help you apply this thinking — not just learn concepts.

To make this easier to navigate, we’ve structured the programs into two clear learning paths based on your goals and time commitment.

Learning Path 1: Become an AB Testing Expert

Best suited if you want to strengthen your experimentation skills end to end.

This path includes:

Course 1: A/B Testing Course for Data Scientists & Product Managers

Discount code: AB101

Course 2: Advanced A/B Testing for Data Scientists

Discount code: AB201

Learning Path 2: Crack Product Data Science Interviews

Designed for those who want to go beyond experimentation and build a broader product data science skill set.

This path includes:

Course 1: A/B Testing Course for Data Scientists & Product Managers

Discount code: AB101

Course 2: Advanced A/B Testing for Data Scientists

Discount code: AB201

Course 3: Product Data Science Bootcamp

Discount code: PDS900

🚨 The discount codes shared above are valid until Jan 26, 11:59 PM PST.

Not sure which course aligns with your goals? Send me a message on LinkedIn with your background and aspirations, and I’ll help you find the best fit for your journey.